Dialogue summarization using zero-shot, one-shot and few-shot inference

Dialogue Summarization using LLMs

In this blog post, I discuss dialogue summarization using LLMs and explore three prompting techniques: zero-shot, one-shot and few-shot inference.

What are zero-shot, one-shot and few-shot inference?

Here, dialogue summarization is done by giving instruction prompts to large language models (LLMs), using three distinct methods:

- Zero-shot inference: The prompt contains only the instruction and the input, with no worked examples.

- One-shot inference: The prompt includes a single worked example before the input.

- Few-shot inference: The prompt includes a small number of worked examples before the input.

I used the FLAN-T5 LLM from Hugging Face in this project. The dataset is DialogSum, which is also hosted on Hugging Face (https://huggingface.co/datasets/knkarthick/dialogsum).

The first step is to install and import the necessary libraries, such as transformers.

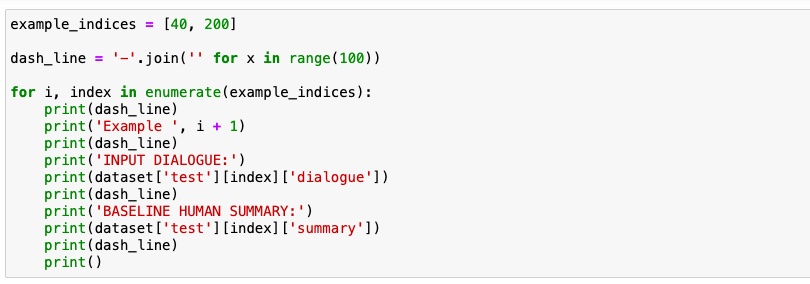

Next, I display a few examples from the dataset together with their reference summaries.

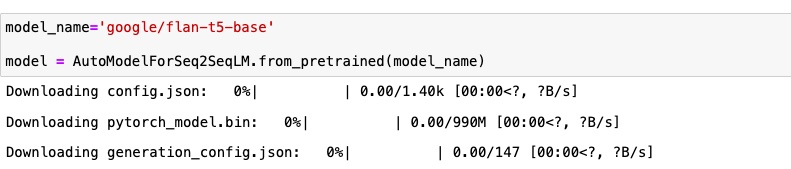
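As a rough text version of this step, here is a minimal sketch that loads DialogSum and prints a few rows. The helper names `format_example` and `show_examples` are my own for illustration, and the `test` split and any indices you pass in are arbitrary choices, not necessarily the ones used in the notebook.

```python
DATASET_NAME = "knkarthick/dialogsum"  # dataset linked earlier in this post


def format_example(index, dialogue, summary):
    """Return one dialogue and its reference summary as printable text."""
    rule = "-" * 80
    return (
        f"{rule}\nExample {index}\n{rule}\n"
        f"INPUT DIALOGUE:\n{dialogue}\n{rule}\n"
        f"REFERENCE SUMMARY:\n{summary}\n"
    )


def show_examples(indices, split="test"):
    """Download DialogSum and print the selected rows of one split."""
    # Imported here so format_example works without the library installed.
    from datasets import load_dataset  # pip install datasets

    dataset = load_dataset(DATASET_NAME)
    for index in indices:
        row = dataset[split][index]
        print(format_example(index, row["dialogue"], row["summary"]))
```

Calling `show_examples([0, 1])` downloads the dataset on first use and prints the first two test dialogues with their summaries.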

Load the FLAN-T5 model by creating an instance with AutoModelForSeq2SeqLM.

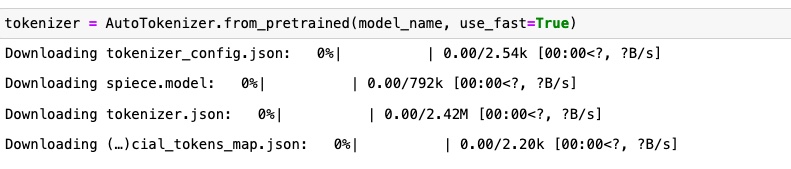

Then download the matching tokenizer.

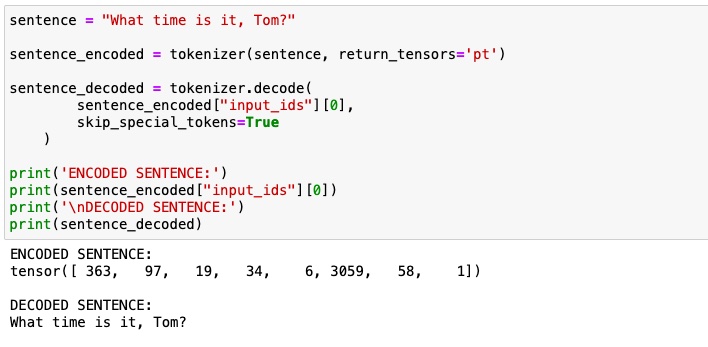
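The two loading steps above can be sketched together as follows. The checkpoint name `google/flan-t5-base` is an assumption on my part, since the post only says FLAN-T5, and `load_model_and_tokenizer` is a hypothetical helper name.

```python
# Assumed checkpoint: the post names FLAN-T5 but not a specific size.
MODEL_NAME = "google/flan-t5-base"


def load_model_and_tokenizer(model_name=MODEL_NAME):
    """Download (or load from cache) a seq2seq model and its tokenizer."""
    # Imported here so the module can be read without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    return model, tokenizer
```

Both objects are loaded from the same checkpoint name so that the tokenizer's vocabulary matches the model.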

Use the tokenizer to encode and decode a sentence.

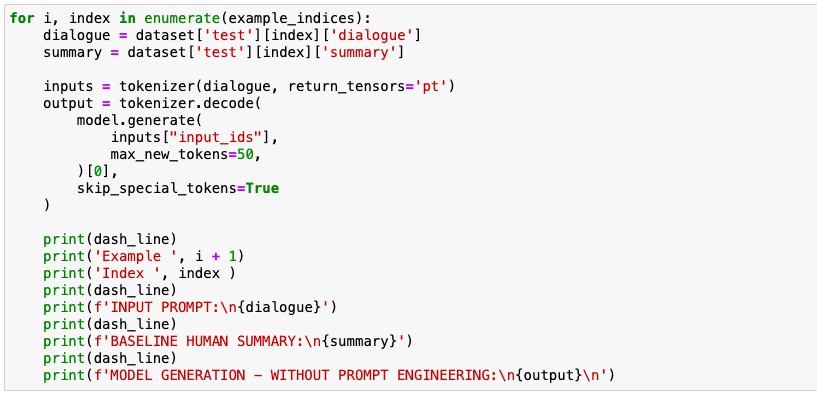
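A minimal sketch of that round trip is below. It assumes `tokenizer` is a Hugging Face tokenizer such as the one loaded earlier, and `round_trip` is an illustrative name rather than something taken from the notebook.

```python
def round_trip(tokenizer, sentence):
    """Encode a sentence to token ids, then decode the ids back to text."""
    # Calling the tokenizer returns a mapping that includes "input_ids".
    token_ids = tokenizer(sentence)["input_ids"]
    # skip_special_tokens drops markers such as the end-of-sequence token.
    decoded = tokenizer.decode(token_ids, skip_special_tokens=True)
    return token_ids, decoded
```

Printing both return values shows the integer ids the model actually sees next to the text they map back to.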

First, I tested the model's performance without any instruction prompt.

The results are not good. Next, I tried adding instruction prompts.

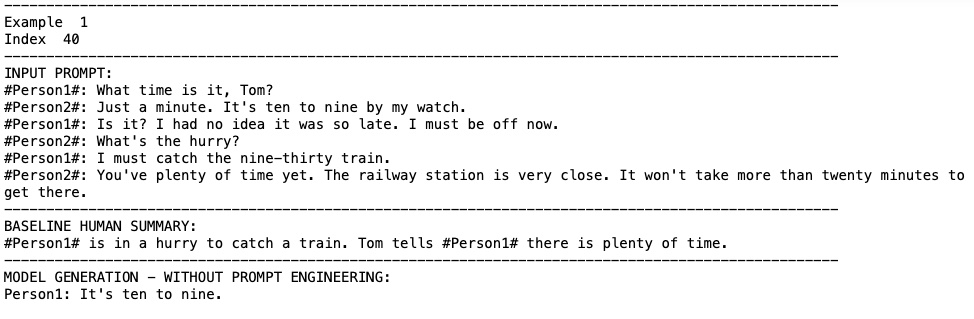
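The no-prompt baseline can be sketched as a small helper that feeds text to the model unchanged. This assumes a Hugging Face seq2seq model and tokenizer. The name `generate_text` and the limit of 50 new tokens are my own choices for illustration.

```python
def generate_text(model, tokenizer, text, max_new_tokens=50):
    """Run the model on `text` as-is and return the decoded output."""
    # return_tensors="pt" asks for PyTorch tensors, which generate() expects.
    inputs = tokenizer(text, return_tensors="pt")
    output_ids = model.generate(inputs["input_ids"], max_new_tokens=max_new_tokens)
    # generate() returns a batch; take the first (and only) sequence.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Passing a raw dialogue as `text` gives the baseline output. The prompted variants only change what string is passed in.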

Zero-shot inference

Here I used the same code as before, but added an instruction prompt asking the LLM to summarize the dialogue. Zero-shot inference uses no examples.

You can experiment with different prompts and see how the output changes as the instruction changes.

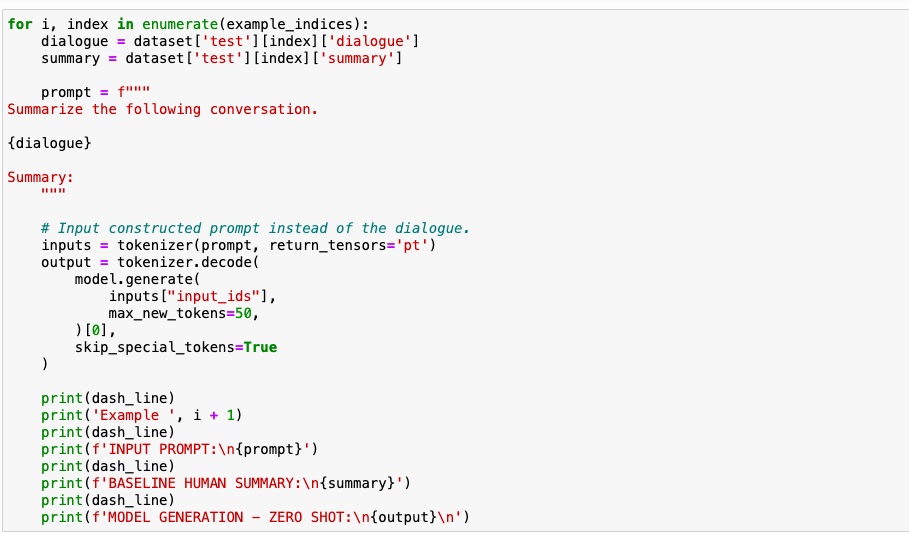
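To make the idea concrete, here is a minimal sketch of a zero-shot prompt builder. The instruction wording, the `Summary:` cue, and the sample dialogue are all assumptions for illustration. The exact prompt used in the notebook may differ.

```python
# Assumed wording; swap in any instruction you want to try.
DEFAULT_INSTRUCTION = "Summarize the following conversation."


def zero_shot_prompt(dialogue, instruction=DEFAULT_INSTRUCTION):
    """Wrap a dialogue in an instruction, with no solved examples."""
    return f"{instruction}\n\n{dialogue}\n\nSummary:"


# Invented sample dialogue in the DialogSum speaker format.
dialogue = "#Person1#: Is the meeting still at three?\n#Person2#: Yes, in room B."
print(zero_shot_prompt(dialogue))
# Changing only the instruction gives a second prompt to compare against.
print(zero_shot_prompt(dialogue, instruction="What is this conversation about?"))
```

Keeping the instruction as a parameter makes it easy to try several phrasings on the same dialogue and compare the summaries.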

One-shot inference

The function below builds a prompt from example prompt-response pairs, followed by a final prompt with no response. The model generates that missing response.

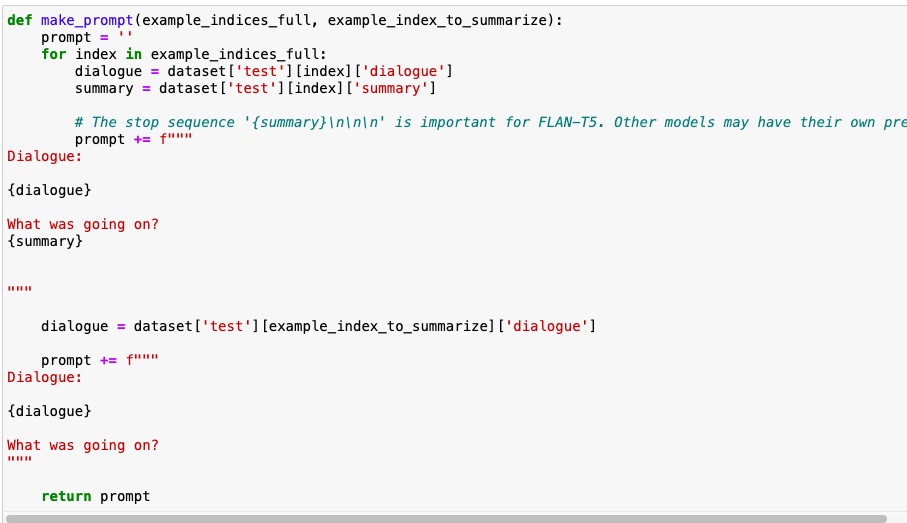

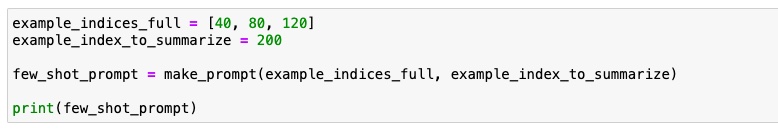
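A function of this kind might look roughly like the sketch below. The name `make_prompt`, its signature, and the `Dialogue:` / `Summary:` template are assumptions of mine, not a copy of the notebook code.

```python
def make_prompt(examples, dialogue):
    """Build a prompt from solved (dialogue, summary) pairs plus one open dialogue."""
    parts = []
    for example_dialogue, example_summary in examples:
        # Each solved example shows the model the expected input/output shape.
        parts.append(
            f"Dialogue:\n\n{example_dialogue}\n\nSummary:\n{example_summary}\n\n\n"
        )
    # The final dialogue has no summary; the model is expected to continue here.
    parts.append(f"Dialogue:\n\n{dialogue}\n\nSummary:\n")
    return "".join(parts)
```

For one-shot inference, `examples` holds a single pair. An empty list reduces the prompt to the open dialogue on its own.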

Few-shot inference

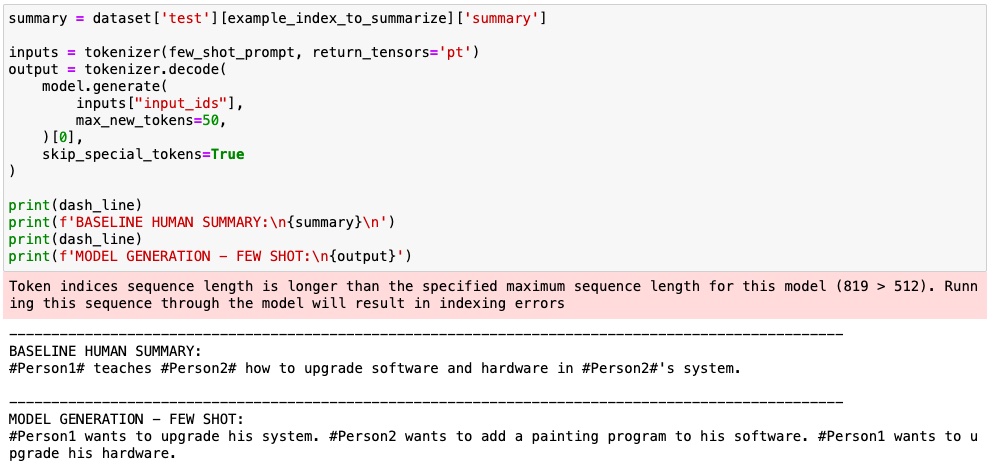

In this technique, we pass in a few example dialogue-summary pairs and then prompt the model to generate a summary for a new dialogue.
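As a small illustration, the sketch below picks several rows of a split by index as solved examples and leaves one row open. The function name `few_shot_prompt`, the template, and the toy rows are all invented for this example. A real run would pass a DialogSum split instead of `toy_split`.

```python
def few_shot_prompt(split, example_indices, target_index):
    """Use rows at `example_indices` as solved examples for the row at `target_index`."""
    blocks = [
        f"Dialogue:\n\n{split[i]['dialogue']}\n\nSummary:\n{split[i]['summary']}\n"
        for i in example_indices
    ]
    # The target row contributes only its dialogue; its summary is left out.
    blocks.append(f"Dialogue:\n\n{split[target_index]['dialogue']}\n\nSummary:\n")
    return "\n".join(blocks)


# Tiny invented stand-in for a dataset split, so the sketch runs without a download.
toy_split = [
    {"dialogue": "#Person1#: Is the report ready?\n#Person2#: I sent it this morning.",
     "summary": "The report has already been delivered."},
    {"dialogue": "#Person1#: Can we move lunch to one?\n#Person2#: Sure.",
     "summary": "They agree on a later lunch."},
    {"dialogue": "#Person1#: My laptop will not start.\n#Person2#: Try the charger.",
     "summary": "A charger is suggested for a dead laptop."},
    {"dialogue": "#Person1#: Which bus goes downtown?\n#Person2#: Number 12.",
     "summary": "Bus 12 is the route into the city centre."},
]

prompt = few_shot_prompt(toy_split, example_indices=[0, 1, 2], target_index=3)
print(prompt)
```

Each added example lengthens the prompt, so the number of examples that fit is limited by the model's input length.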

Looking at the output, the few-shot results are almost the same as the one-shot results for this use case.

Conclusion

In this article, I covered three prompt engineering techniques: zero-shot, one-shot and few-shot inference. I explained how each one is performed and compared the results. The full code is available in the Colab notebook.

Reference

Generative AI with LLMs, DeepLearning.AI